It is quite common in Node Express backend development to come across a requirement of parsing and processing CSV files. Usually, it is to store the content in the database after processing.

In this post, we will upload a CSV file, store it temporarily in the server directory to fetch all rows in JSON form, push all rows in an array, and remove the temporary file.

At the end of this post, we’ll discuss a workaround as well.

Let’s jump straight into it. Here are the steps:

Create an index.js file for a simple express server. Include npm packages:

fshttpmulterfast-csvexpress

(install with npm install by including the packages in dependency as shown in the end or install them by running the npm install --save multer express fast-csv command. fs and http comes with node, so no need of installation.)

Also, create Router variable.

const http = require('http');

const fs = require('fs');

const express = require('express');

const multer = require('multer');

const csv = require('fast-csv');

const Router = express.Router;

Define multer upload directory ‘tmp/csv/’ as the destination for CSV files to be uploaded temporarily and add definitions for the express server app.

const upload = multer({ dest: 'tmp/csv/' });

const app = express();

const router = new Router();

const server = http.createServer(app);

const port = 9000

Define upload-csv endpoint with app.use and add post route. Include upload.single('file') as the second argument

router.post('/', upload.single('file'), function (req, res) {

});

app.use('/upload-csv', router);

Add code to start the server at the end:

function startServer() {

server.listen(port, function () {

console.log('Express server listening on ', port);

});

}

setImmediate(startServer);

Now on hitting /upload-csv endpoint with a CSV file, req.file will contain the uploaded file information. We’re only interested in req.file.path, passing which to csv’s fromPath method opens the file and returns one row at a time on data event in JSON form.

As we’re interested in processing data only and not the file itself, we push each row in an array to remove the temporary file later.

const fileRows = [];

csv.fromPath(req.file.path)

.on("data", function (data) {

fileRows.push(data); // push each row

})

Another event end is fired when all rows are returned in data event callback. Since we have stored them in the array, we can safely remove the temporary file. We use fs.unlinkSync.

const fileRows = [];

csv.fromPath(req.file.path)

.on("data", function (data) {

fileRows.push(data); // push each row

})

.on("end", function () {

console.log(fileRows) //contains array of arrays. Each inner array represents row of the csv file, with each element of it a column

fs.unlinkSync(req.file.path); // remove temp file

//process "fileRows" and respond

})

And that’s it! For validation of uploaded CSV data, check Validate Uploaded CSV Data In Node Express.

Putting server code and package.json for npm packages all together:

index.js

'use strict';

const http = require('http');

const fs = require('fs');

const express = require('express');

const multer = require('multer');

const csv = require('fast-csv');

const Router = express.Router;

const upload = multer({ dest: 'tmp/csv/' });

const app = express();

const router = new Router();

const server = http.createServer(app);

const port = 9000

router.post('/', upload.single('file'), function (req, res) {

const fileRows = [];

// open uploaded file

csv.fromPath(req.file.path)

.on("data", function (data) {

fileRows.push(data); // push each row

})

.on("end", function () {

console.log(fileRows)

fs.unlinkSync(req.file.path); // remove temp file

//process "fileRows" and respond

})

});

app.use('/upload-csv', router);

// Start server

function startServer() {

server.listen(port, function () {

console.log('Express server listening on ', port);

});

}

setImmediate(startServer);

package.json

{

"name": "csv-upload",

"main": "index.js",

"dependencies": {

"express": "^4.16.3",

"fast-csv": "^2.4.1",

"multer": "^1.3.0"

}

}

Workaround

Before committing to the above implementation, you should ask yourself if sending the CSV file to the backend is necessary. If not (which in most cases isn’t), you could simply convert the CSV file on the frontend and send the JSON data to the backend, where you will treat the CSV file content as any other JSON for processing and storing.

One helpful library for that is papaparse. You can include it in your project with a script tag:

<script src="https://cdnjs.cloudflare.com/ajax/libs/PapaParse/5.3.1/papaparse.min.js"

integrity="sha512-EbdJQSugx0nVWrtyK3JdQQ/03mS3Q1UiAhRtErbwl1YL/+e2hZdlIcSURxxh7WXHTzn83sjlh2rysACoJGfb6g=="

crossorigin="anonymous" referrerpolicy="no-referrer"></script>

Or, if using npm, npm i papaparse.

Following is a simplified code in pure HTML/JavaScript (must include the above script) for selecting and converting a CSV file to JSON:

<input id="csv" name="csv" type="file" accept=".csv">

<script>

var fileInput = document.getElementById('csv');

fileInput.addEventListener('change', function (event) {

var csvInput = event.target;

var file = csvInput.files[0];

Papa.parse(file, {

complete: function (results) {

console.log(results.data);

// process the JSON

}

});

});

</script>

On the complete callback, you can process and send the JSON in results.data to the backend.

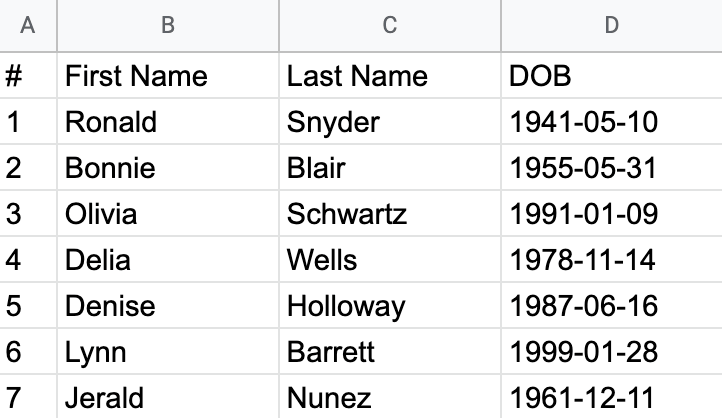

For a sample CSV file selected this way, the logged JSON on the frontend looks like this:

[

[

"name",

"roll no",

"dob"

],

[

"John Smith",

"4012",

"1995/12/12"

],

[

"Lien Beaston",

"4013",

"1995/10/22"

],

[

"Tanisha Mcneel",

"4014",

"1996/07/01"

],

[

"William Gadison",

"4015",

"1996/05/30"

],

[

"Scott Mangold",

"4016",

"1995/02/11"

],

[

"Roland Benz",

"4017",

"1995/11/20"

],

[

"Jan Elkins",

"4018",

"1996/01/10"

],

[

""

]

]

This workaround offloads the heavy processing of all the possible CSV file uploads to the users' browsers, saving the server a lot of valuable CPU power on the backend.

Now, we don’t need multer or fast-csv, and we can reduce our API endpoint to:

router.post('/', function (req, res) {

console.log(req.body.csvJSON); // "csvJSON" is what you will send from the frontend

});

app.use('/upload-csv', router);

To export the MongoDB collection data to CSV, check Export Mongodb Collection Data In CSV From Node Express Mongoose Application.

See also

- Preventing Privilege Escalation in NoSQL & SQL: Secure Node.js Practices

- Node JS Mongo Client for Atlas Data API

- SignatureDoesNotMatch: The request signature we calculated does not match the signature you provided. Check your key and signing method.

- Exactly Same Query Behaving Differently in Mongo Client and Mongoose

- AWS Layer: Generate nodejs Zip Layer File Based on the Lambda's Dependencies

- In Node JS HTML to PDF conversion, Populate Images From URLs

- Convert HTML to PDF in Nodejs